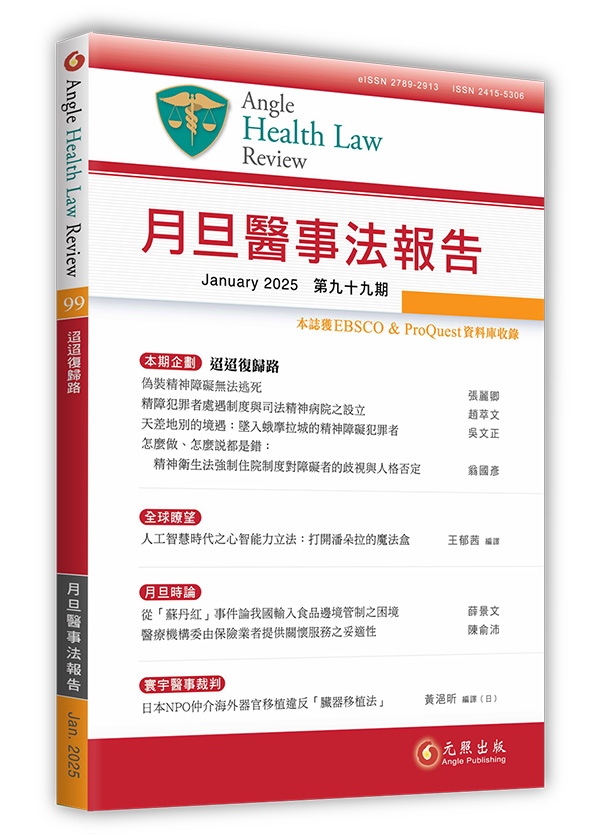

人工智慧時代之心智能力立法:打開潘朵拉的魔法盒【全球瞭望】

Artificial Intelligence and Mental Capacity Legislation: Opening Pandora’s Modem

有決策能力障礙的人應與能力健全的人享有相同的技術近用權。本文著眼於如何在人工智慧和心智能力立法的情境中實現這項權利。愛爾蘭於2023年4月開始實施的2015年版「輔助決策(能力)法案」,在其能力的「溝通」標準中提到了「輔助性技術」。本文將探討人工智慧在該立法下協助溝通的潛在效益與風險,並試圖找出有可能適用於其他司法管轄區的原則或經驗教訓。我們會特別討論愛爾蘭關於預立醫療照護指示的規定,因為先前的研究表明,預立醫療照護計畫的常見障礙包括:一、缺乏知識和技能,二、對於開始討論預立醫療照護計畫的恐懼,以及三、缺乏時間。我們假設這些障礙有可能透過在世界各地都已經可以免費使用的生成式人工智慧來克服,或至少克服一部分。聯合國等機構已經出版了關於人工智慧的使用倫理的指南,這也會引導本文的分析。目前所面臨的其中一項倫理風險是人工智慧將逾越溝通的範疇並開始影響決策的內容,特別是在面對有決策能力障礙的人時。例如,當我們要求一個人工智慧模型「為我制定預立醫療指示」時,它的最初回應雖然沒有明確建議醫療指示的內容,但它卻建議了可能被納入的主題。這樣的建議可以被視為是在設定議程。規避這一缺點和其他缺點(例如對資訊準確性的擔憂)的一種可能性是利用人工智慧的基礎模型。以目的導向設計的人工智慧模型具備對下游任務進行培訓和微調的能力,因此可被用於提供有關能力立法的教育、促進患者和醫護人員的互動,以及讓醫療衛生專業人員進行互動式更新。這些措施可以提升人工智慧的優勢並將風險最小化。另一項類似的嘗試是透過訓練大型語言模型更安全、準確地回答醫療衛生問題,讓人們可以更負責任地在醫療衛生領域使用人工智慧。本文也強調了在充分發揮人工智慧的潛力的同時最大限度地降低在這群人中的風險這件事需要被公開的討論。

People with impaired decision-making capacity enjoy the same rights to access technology as people with full capacity. Our paper looks at realising this right in the specific contexts of artificial intelligence (AI) and mental capacity legislation. Ireland’s Assisted Decision-Making (Capacity) Act, 2015, which commenced in April 2023, refers to ‘assistive technology’ within its ‘communication’ criterion for capacity. We explore the potential benefits and risks of AI in assisting communication under this legislation and seek to identify principles or lessons which might be applicable in other jurisdictions. We focus especially on Ireland’s provisions for advance healthcare directives because previous research demonstrates that common barriers to advance care planning include (i) lack of knowledge and skills, (ii) fear of starting conversations about advance care planning, and (iii) lack of time. We hypothesise that these barriers might be overcome, at least in part, by using generative AI, which is already freely available worldwide. Bodies such as the United Nations have produced guidance about the ethical use of AI, and this guidance informs our analysis. One of the ethical risks in the current context is that AI might reach beyond communication and start to influence the content of decisions, especially among people with impaired decision-making capacity. For example, when we asked one AI model to ‘Make me an advance healthcare directive’, its initial response did not explicitly suggest content for the directive, but it did suggest topics that might be included, which could be seen as setting an agenda. One possibility for circumventing this and other shortcomings, such as concerns around accuracy of information, is to look to foundational models of AI. Because they can be trained and fine-tuned for downstream tasks, purpose-designed AI models could be adapted to provide education about capacity legislation, facilitate patient and staff interaction, and allow interactive updates by healthcare professionals. These measures could optimise the benefits of AI and minimise risks. Similar efforts have been made to use AI more responsibly in healthcare by training large language models to answer healthcare questions more safely and accurately. We highlight the need for open discussion about optimising the potential of AI while minimising risks in this population.

056-075